When I first started cold emailing for my agency, I used to put all my trust in a single version of my email: one subject line, one body copy, one CTA. Then I started A/B testing. Open rates started climbing. Reply rates doubled. And I finally felt in control of the outreach process instead of just crossing my fingers every time I hit “send.”

In this guide, I want to walk you through how I use A/B testing to improve my cold email campaigns — not in theory, but in practice. Whether you’re just getting started with cold outreach or already running campaigns at scale, I’m confident that this process will help you extract maximum performance from every email you send.

Here’s what A/B testing allows me to do:

- Objectively compare ideas instead of guessing what works

- Boost open rates by finding subject lines that grab attention

- Increase replies by tweaking CTAs, value props, and tone

- Gather data fast and make smarter decisions for future campaigns

And best of all, it gives me confidence. I’m not “hoping” something works — I’m letting the numbers tell me what’s working.

What Exactly Can You A/B Test in Cold Emails?

I’ve run hundreds of A/B tests over the last few years. And while you can test anything, I’ve found that some areas move the needle more than others.

Here are the cold email elements I test most frequently:

1. Subject Line

Your subject line is your gatekeeper. If it doesn’t hook them, they’ll never read the rest.

Test ideas like:

- First-name personalization vs. no personalization

- Question format vs. statement

- Short & curiosity-driven vs. descriptive

- Capitalization differences (“quick idea” vs. “Quick Idea”)

2. Opening Line

The first sentence sets the tone and decides whether the reader keeps going.

Try testing:

- Compliment vs. cold value prop

- Mentioning a mutual connection vs. going straight into a pitch

- Referring to recent activity (e.g., podcast, blog) vs. general intro

3. Email Body Copy

This is where you explain your offer. I often test:

- Long vs. short format (2-3 lines vs. 5-6 lines)

- Bullet points vs. paragraph

- Humorous tone vs. professional tone

- More benefits-focused vs. problem-agitation-focused

4. Call-to-Action (CTA)

Don’t underestimate how much your CTA influences reply rate. I usually test:

- “Would you be open to this?” vs. “Want to learn more?”

- Hard CTA (booking link) vs. soft CTA (question)

- Day/time-based CTA (e.g., “Do you have 10 mins Thursday?”)

5. Signature and Sender Info

Believe it or not, how you end the email matters too. You can test:

- Full name + title vs. just first name

- Including website vs. not

- Casual tone vs. more formal

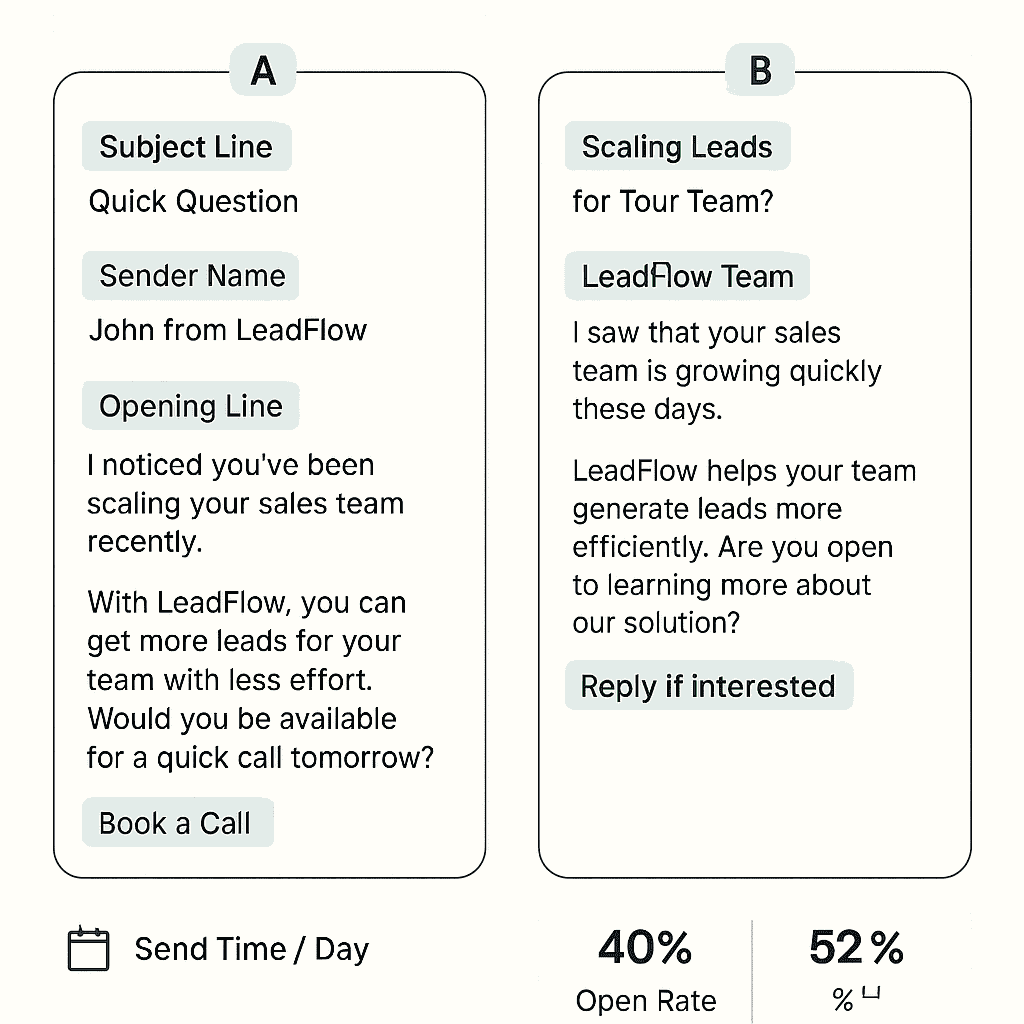

My Personal A/B Testing Framework

I want to share how I structure my testing. This is not theory. It is the exact framework I use at Cold Outreach Agency for client campaigns.

Step 1: Test One Variable at a Time

A mistake I made early on was changing multiple things in a single test. That made it impossible to know what caused the result.

Now I follow this rule: Only test one major element at a time.

For example:

- Version A: “Quick question about your growth goals”

- Version B: “Idea to help [company] grow faster”

That’s a subject line test. Everything else in the email stays the same.

Once I find a winning subject line, I might test the email body while keeping the subject line constant.

Step 2: Split Your List Properly

I make sure each version of the email goes to similar types of prospects. That means:

- Same industry

- Same size of business

- Same position (e.g., all CMOs or all agency owners)

Why? Because if Version A goes to SaaS CEOs and Version B goes to eCommerce founders, the test isn’t valid.
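Here is a minimal sketch of how that kind of split could be done in Python. It groups prospects by industry, business size, and position, then divides each group randomly between the two versions. The field names (`industry`, `size`, `title`, `email`), the `split_ab` helper, and the sample prospects are all assumptions for illustration, not part of any tool mentioned in this guide.

```python
import random
from collections import defaultdict


def split_ab(prospects, keys=("industry", "size", "title"), seed=7):
    """Assign each prospect to "A" or "B", balanced inside every segment."""
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    segments = defaultdict(list)
    for prospect in prospects:
        # Prospects sharing industry, size, and title land in the same segment
        segments[tuple(prospect[k] for k in keys)].append(prospect)

    assignment = {}
    for members in segments.values():
        rng.shuffle(members)       # random order within the segment
        offset = rng.randrange(2)  # random start so odd leftovers do not always go to A
        for i, prospect in enumerate(members):
            assignment[prospect["email"]] = "A" if (i + offset) % 2 == 0 else "B"
    return assignment


# Hypothetical sample data: 4 SaaS CEOs and 4 eCommerce founders
prospects = [
    {"email": f"ceo{i}@saas.example", "industry": "SaaS", "size": "11-50", "title": "CEO"}
    for i in range(4)
] + [
    {"email": f"founder{i}@shop.example", "industry": "eCommerce", "size": "1-10", "title": "Founder"}
    for i in range(4)
]

assignment = split_ab(prospects)
for prospect in prospects:
    print(prospect["industry"], prospect["email"], assignment[prospect["email"]])
```

Because the split happens inside each segment, both versions end up with the same mix of SaaS CEOs and eCommerce founders, so a difference in results is less likely to come from the audience.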

I usually let the test run for 3-5 days, depending on volume. That gives me enough data to see a trend without waiting forever.

If I’m sending 100 emails per version, I want at least:

- 20-30 opens before judging the subject line performance

- 5+ replies before judging CTA/body copy
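Those minimums can be written down as a simple check so a test is not judged too early. This is only a sketch: the `ready_to_judge` helper is hypothetical, it uses the lower bound of 20 opens, and it assumes the minimums apply to each version separately.

```python
def ready_to_judge(opens_a, opens_b, replies_a, replies_b,
                   min_opens=20, min_replies=5):
    """Report which comparisons have enough data to be judged."""
    return {
        # Assumption: each version needs to reach the minimum on its own
        "subject_line": min(opens_a, opens_b) >= min_opens,
        "cta_or_body": min(replies_a, replies_b) >= min_replies,
    }


# Hypothetical counts a few days into a test
status = ready_to_judge(opens_a=34, opens_b=22, replies_a=6, replies_b=3)
print(status)  # {'subject_line': True, 'cta_or_body': False}
```

In this made-up example the subject line comparison is ready, but the CTA or body copy comparison needs more replies before it means anything.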

Step 3: Declare a Winner — Then Test Again

Once I have a winner, I use that version as my new control and test a new variable. It’s a constant loop:

- A/B test 1: Subject line → Winner = A

- A/B test 2: Body copy (using A’s subject line) → Winner = B

- A/B test 3: CTA (using B’s body copy + A’s subject) → and so on

This process stacks improvements. Over time, even small wins (5-10% better open or reply rates) compound into major results.
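To see how stacking works, here is a small arithmetic sketch. It assumes each win is a relative lift and that lifts from different elements multiply cleanly, which is an optimistic simplification. The 4% starting reply rate and the three 10% lifts are hypothetical numbers, not results from my campaigns.

```python
def compound(base_rate, relative_lifts):
    """Apply a sequence of relative lifts to a starting rate."""
    rate = base_rate
    for lift in relative_lifts:
        rate *= 1 + lift  # a 10% lift multiplies the rate by 1.10
    return rate


# Hypothetical: 4% reply rate, then three winning tests worth +10% each
final = compound(0.04, [0.10, 0.10, 0.10])
print(f"{final:.2%}")  # 5.32%
```

Three 10% wins add up to roughly a 33% improvement rather than 30%, because each lift builds on the previous winner.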

Tools I Use for A/B Testing Cold Emails

You don’t need complex tools to do this. I use:

1. Instantly.ai

My favorite for running multi-version campaigns at scale. Lets me test 2-4 versions simultaneously with automatic tracking of opens/replies.

2. Lemlist

Great for testing personalized images or video intros — but also useful for standard A/B testing.

3. Smartlead

Lightweight and easy. Good analytics and warm-up features make testing clean and safe.

4. Google Sheets + Gmail (Manual Method)

For small lists or beginners, I sometimes run tests manually. Just segment the sheet, send manually, and track results in columns.
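If you track results manually, a sheet exported as CSV is enough to calculate open and reply rates per version. The sketch below assumes a hypothetical layout with `version`, `email`, `opened`, and `replied` columns, where the last two hold "yes" or "no". Your own sheet may look different.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export of a tracking sheet (one row per email sent)
SHEET_CSV = """\
version,email,opened,replied
A,a1@example.com,yes,no
A,a2@example.com,no,no
A,a3@example.com,yes,yes
A,a4@example.com,no,no
B,b1@example.com,yes,no
B,b2@example.com,yes,yes
B,b3@example.com,yes,no
B,b4@example.com,no,no
"""


def rates_by_version(csv_text):
    """Return sent count, open rate, and reply rate for each version."""
    totals = defaultdict(lambda: {"sent": 0, "opened": 0, "replied": 0})
    for row in csv.DictReader(io.StringIO(csv_text)):
        t = totals[row["version"]]
        t["sent"] += 1
        # A True comparison counts as 1, a False one as 0
        t["opened"] += row["opened"].strip().lower() == "yes"
        t["replied"] += row["replied"].strip().lower() == "yes"
    return {
        version: {
            "sent": t["sent"],
            "open_rate": t["opened"] / t["sent"],
            "reply_rate": t["replied"] / t["sent"],
        }
        for version, t in totals.items()
    }


rates = rates_by_version(SHEET_CSV)
for version, stats in sorted(rates.items()):
    print(version, f"open {stats['open_rate']:.0%}", f"reply {stats['reply_rate']:.0%}")
```

Eight rows are far too few for a real test. The sample is kept tiny only so the arithmetic is easy to follow.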

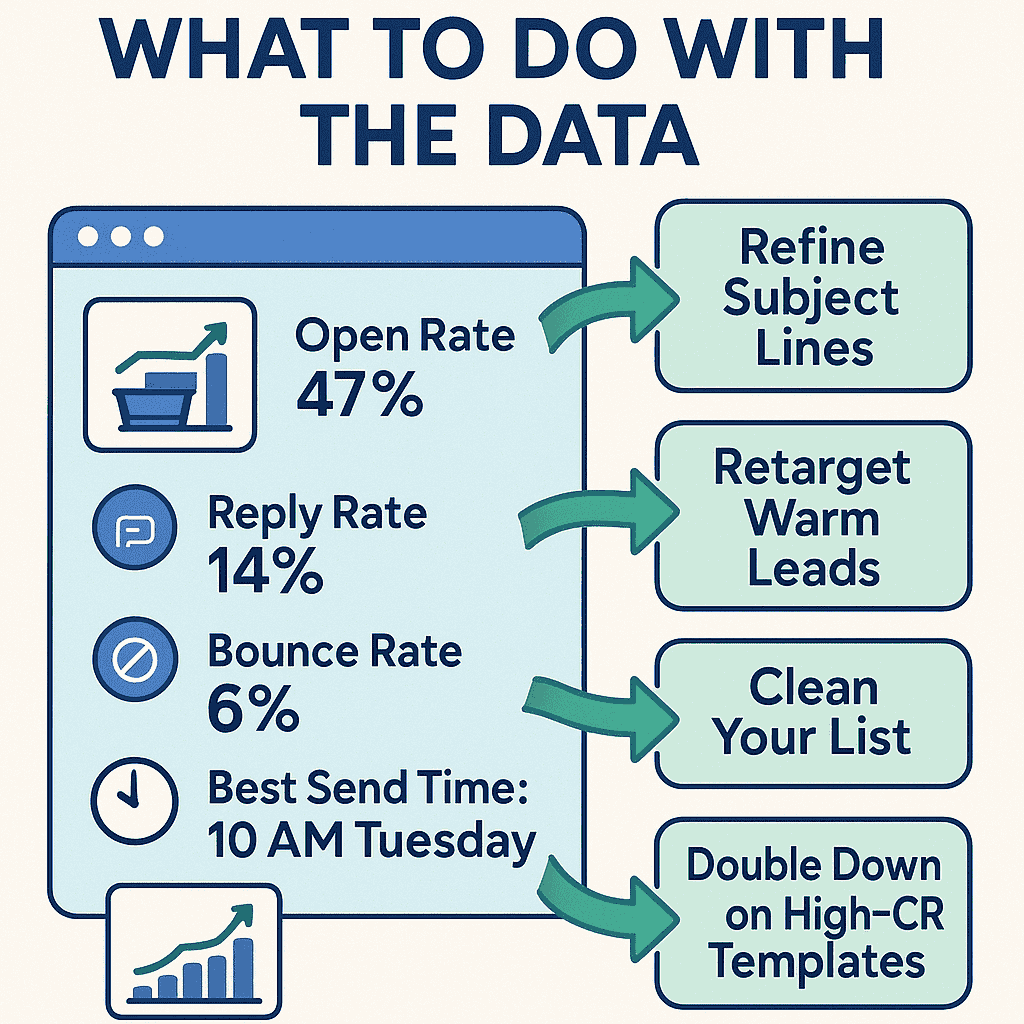

What to Do With the Data

It’s not enough to just run the test — I always analyze the results. Here’s how I do it.

For Subject Line Tests:

- If one version has significantly higher opens (10%+), that’s my winner.

- If opens are close but replies are drastically different, that may mean the email body is affecting performance, not the subject.

For Body/CTA Tests:

- I look for reply rate improvements of 2-3% or more as meaningful.

- If both versions underperform, I will rework the whole value proposition.
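The thresholds above are rules of thumb. If you want an extra sanity check on whether a gap could just be noise, one option is a two-proportion z-test. This is a standard statistical technique that I am adding here as a sketch, not part of the framework described above, and the counts in the example are hypothetical.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)  # combined rate across both versions
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, so no evidence of a difference
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the normal curve
    return z, p_value


# Hypothetical subject line test: 22 opens vs. 35 opens, 100 emails each
z_open, p_open = two_proportion_z_test(22, 100, 35, 100)
print(f"opens:   z={z_open:.2f}, p={p_open:.3f}")

# Hypothetical body copy test: 4 replies vs. 9 replies, 100 emails each
z_reply, p_reply = two_proportion_z_test(4, 100, 9, 100)
print(f"replies: z={z_reply:.2f}, p={p_reply:.3f}")
```

A small p-value (0.05 is a common cutoff) suggests the gap is unlikely to be chance alone. With only 100 emails per version, even a reply rate that more than doubles can fail that bar, which is one more reason to wait for decent volume before declaring a winner.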

I also keep a “Cold Email Hall of Fame” in Notion — a database of my best-performing lines, CTAs, and email formats from past tests. That way, I’m not starting from scratch every time.

Real Results I’ve Seen from Testing

Here are a few actual examples from my campaigns:

- Changing the subject line from “Growth idea for your agency” to “Quick idea for [FirstName]” increased open rates from 21% to 38%.

- Replacing a paragraph pitch with a 3-bullet benefit list boosted replies from 4% to 9%.

- Changing the CTA from “Would you like to explore this?” to “Open to a quick call on Thursday?” increased meetings booked by 70% over two weeks.

These aren’t hypothetical improvements — these are results that helped close real clients for my agency and the clients I serve.

Common A/B Testing Mistakes to Avoid

If you’re just getting started, here are some traps I’ve fallen into (so you can avoid them):

- Testing too many variables at once – It muddles the results.

- Small sample sizes – 10 emails per version won’t tell you anything.

- Declaring winners too early – Wait for decent volume.

- Not tracking data properly – If you’re not organizing open/reply rates clearly, you’re guessing again.

- Ignoring qualitative feedback – If someone replies with “This was a great email,” note that too. Subjective signals matter.

The biggest shift for me wasn’t just doing A/B testing. It was developing the discipline to do it consistently and methodically.

When you treat cold outreach like a science — instead of just an art — you start winning more. You become a better communicator. You start thinking like your prospect. And most importantly, you build repeatable systems that scale.

If your emails aren’t performing the way you want, don’t assume your offer sucks or the market is dead. Start testing. One variable at a time. One campaign at a time. And watch the results stack up.

Trust me: when you send that “winning” version that gets 12 replies in an hour, you’ll never go back to winging it again.

After running hundreds of cold email campaigns — both for my agency and for clients — I can say with complete confidence that A/B testing is the most underrated weapon in the entire cold outreach arsenal. It’s not as flashy as some AI personalization tools. It’s not as hyped as a fancy data scraping extension. But if you want real, measurable, predictable results… A/B testing is where the magic happens.

Let me put it bluntly: If you’re not testing, you’re guessing.

And in a competitive B2B environment where inboxes are flooded and attention spans are shorter than ever, guessing is the fastest way to burn your list, waste your time, and wonder why your campaigns aren’t working.

That used to be me.

Before I got serious about testing, I was sending emails that felt right but didn’t perform. I’d write what I thought sounded persuasive. I’d follow a few online templates that seemed to work for others. But nothing was tailored to my audience. Nothing was optimized based on actual data. And worst of all, I didn’t even know what wasn’t working.

That changed the day I ran my first A/B test — and realized that a single subject line tweak boosted my open rates by 60%.

Since then, I’ve made it a core discipline in every campaign I run. And looking back, I now see A/B testing not just as a tactic, but as a mindset.

It’s Not About Being Right — It’s About Being Curious

What I love about A/B testing is that it removes ego from the process. It doesn’t matter what I think will work. It doesn’t matter how confident I am in a clever line or a snappy CTA. What matters is: what does the market respond to?

That’s liberating, honestly.

You treat every campaign like a mini science project. You become more curious than controlling. And that shift is powerful, especially if you’re in this game for the long run.

Instead of getting discouraged by low replies, I get excited to test new versions.

Instead of trying to write the “perfect” email on the first try, I create two decent versions and see what works better.

It’s faster. It’s smarter. And over time, it makes your emails scary effective.

Tiny Tweaks Can Create Massive Wins

One of the most surprising things I’ve learned is how small changes can make a big difference. We’re talking about changing one line… one word… even the way a question is phrased — and getting double the replies.

For example:

- Swapping “Interested in a quick chat?” with “Would it be insane to explore this?” added 14 extra replies in a 100-email batch.

- Using lowercase subject lines (“quick idea”) consistently performed better than capitalized ones (“Quick Idea”) in several industries.

- Moving a CTA from the end of the email to the middle improved engagement in high-ticket service campaigns.

These aren’t massive overhauls. They’re small, controlled experiments. But they compound.

Here’s another reason I love testing: it teaches me about my audience in ways no blog or course ever could.

When I test subject lines, I learn what gets attention.

When I test CTAs, I learn what feels non-threatening or exciting to my prospects.

When I test tone, I discover how formal or casual they prefer communication.

That’s real insight. It’s not based on theory — it’s based on direct behavioral data from the exact people I want to convert.

Think about that for a second. While others are spending hours debating what their “ideal client” might want to hear, I’m getting real-time answers by sending two versions of an email and watching what happens.

Over time, I build what I call “conversion intelligence” — not just how to get responses, but why people are responding. That helps me refine my ICPs, write better copy, and even shape offers more strategically.

Testing Brings Structure to a Chaotic Process

If you’ve ever felt overwhelmed trying to improve your cold outreach — trust me, I get it. There are so many variables: your offer, your list, your domain, your timing, your copy, your follow-ups…

It can feel chaotic.

Testing cuts through that. It gives me a system for iteration. And best of all, it gives me clarity on what to do next.

Instead of asking “why isn’t this working?” I ask:

“Which element haven’t I tested yet?”

That’s a way better question, because it leads to actual solutions.

I don’t believe in magical templates. What worked for someone else might flop in your niche.

You Don’t Need Fancy Tools to Start

Let me bust one myth: you don’t need expensive tools to run A/B tests. When I started, I used Google Sheets and Gmail.

The only thing you need is a list, two email versions, and a way to track results.

Yes, tools like Instantly, Lemlist, Smartlead, and Mailreach make it easier, especially at scale. But don’t wait for the perfect stack. Just start. Write two versions. Split your list evenly. Track open and reply rates. That’s it.

Don’t overcomplicate it.

Even a basic test with 40-50 leads per version can give you directional data.

And once you see that one version outperforms the other, you’ll be hooked.

At the end of the day, cold outreach is only scalable if it’s predictable.

You can’t scale chaos. You can’t build a team or automate a process that’s driven by random intuition.

But when you A/B test consistently, you build a repeatable system:

- A process for testing new ideas

- A playbook of proven subject lines, openings, and CTAs

- A benchmark for what “good” performance looks like

- A method for optimizing every campaign before scaling

That’s how I’ve been able to run campaigns for SaaS founders, marketing agency owners, coaches, consultants, and still maintain high reply rates across the board.

Because I’m not guessing. I’m testing.

And I’m always learning.

Let me give you a piece of advice I wish I had followed earlier:

Play the long game.

You won’t find the winning formula on Day 1. Some tests will flop. Some results will confuse you. Sometimes you’ll get false positives or unexpected results. Stick with it anyway, because the payoff comes later:

When replies come in without chasing.

When meetings fill your calendar.

When clients ask you how you’re getting such good results.

It all starts with one small decision: to stop guessing, and start testing.

And once you do that consistently?

You’ll never send a “random” cold email again.

Let me leave you with this:

The best cold emailers aren’t better writers. They’re better testers.

So start testing. And let your results speak louder than your opinions.

Frequently Asked Questions

Q1: Can I test more than two versions (A/B/C/D)?

Yes. Many tools, like Instantly, let you test several versions at once (A/B/n testing). Just make sure your list is big enough to support multiple splits.

Q2: What’s the biggest factor that affects open rate?

Subject line is the #1 driver. But don’t overlook sender name, domain health, and sending time.

Q3: Should I personalize both versions in a test?

Yes — keep personalization consistent between versions unless that’s the variable you’re testing.

Q4: Can I run A/B tests in follow-up emails too?

Absolutely. Subject line and body structure in follow-ups also deserve testing.

If you’re serious about improving your cold outreach, A/B testing isn’t optional — it’s your secret weapon. Start small. Track religiously. Iterate ruthlessly.

You’ll be surprised how much a 5-word subject line tweak can change your entire business.